Autonomous Off-Road Driving

I will continue to update this page as I develop more cohesive results and figures.

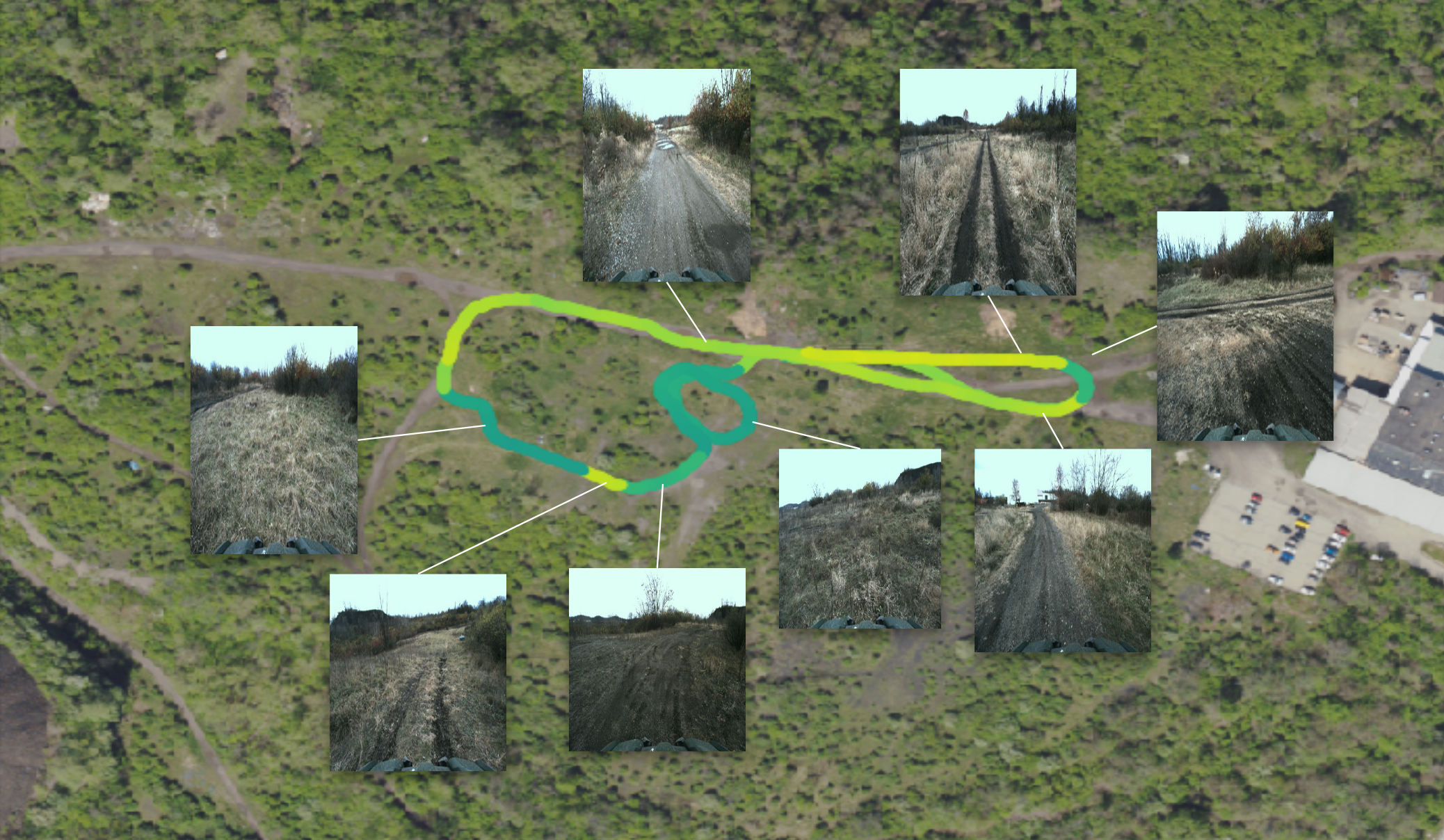

My research for my Master’s thesis centers on the following concepts in the context of off-road driving:

- Self-supervised learning - leveraging cues intrinsic to the collected data (e.g. expert demonstrations, proprioceptive cues) rather than expensive manual labels

- Online adaptation - designing an autonomous system that can adapt its behavior as it encounters previously unseen terrain

- Multi-modal representation learning - using different sensors simultaneously to perceive geometric, semantic, and other information in the environment

Recently, I’ve been exploring how to use generalized features from visual foundation models to drive adaptive behaviors. Because these feature extractors are strong out of the box, we can perform terrain segmentation and uncertainty detection without labelling any of our own data. Coupling this with proprioceptive signals such as IMU data lets us quickly learn how difficult or rough a previously unseen terrain is.
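To make the coupling of visual terrain segments with IMU feedback concrete, here is a minimal sketch of one possible online roughness estimator. It is not my actual implementation: it assumes each IMU window has already been associated with a terrain cluster id (for example, from clustering foundation-model features of the patch under the wheels), and it uses the standard deviation of vertical acceleration as a stand-in roughness measure. The class name `OnlineTerrainRoughness`, the exponential-moving-average update, and the conservative `prior` for never-driven clusters are all illustrative assumptions.

```python
import numpy as np
from collections import defaultdict


class OnlineTerrainRoughness:
    """Hypothetical per-terrain-cluster roughness estimate, updated online.

    Assumption: cluster ids come from an upstream segmentation of
    foundation-model features; roughness = std of vertical acceleration.
    """

    def __init__(self, alpha=0.2, prior=None):
        self.alpha = alpha              # EMA weight given to each new IMU window
        self.prior = prior              # returned for clusters never driven on
        self.estimates = {}             # cluster id -> current roughness estimate
        self.counts = defaultdict(int)  # cluster id -> number of IMU windows seen

    def update(self, cluster_id, accel_z):
        """Fold one window of vertical acceleration samples (m/s^2) into the estimate."""
        r = float(np.std(accel_z))
        if cluster_id not in self.estimates:
            # First experience of this terrain: take the measurement directly.
            self.estimates[cluster_id] = r
        else:
            old = self.estimates[cluster_id]
            self.estimates[cluster_id] = (1 - self.alpha) * old + self.alpha * r
        self.counts[cluster_id] += 1
        return self.estimates[cluster_id]

    def query(self, cluster_id):
        """Roughness for a cluster, or the (conservative) prior if it is unseen."""
        return self.estimates.get(cluster_id, self.prior)


est = OnlineTerrainRoughness(alpha=0.5, prior=1.0)
est.update(1, np.array([8.0, 12.0]))   # bumpy window on terrain cluster 1
est.update(1, np.array([10.0, 10.0]))  # smooth window on the same cluster
print(est.query(1), est.query(2))      # cluster 2 has never been driven on
```

An exponential moving average keeps the update constant-time and lets the estimate track terrain whose feel changes over a run; returning a pessimistic prior for unseen clusters is one simple way to let segmentation-level uncertainty translate into cautious behavior.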

Autonomous High-Speed Racing

I’ve been a member of the MIT-PITT-RW racing team since 2020, where I’ve been working on perception algorithms and serving as a mentor to younger students.

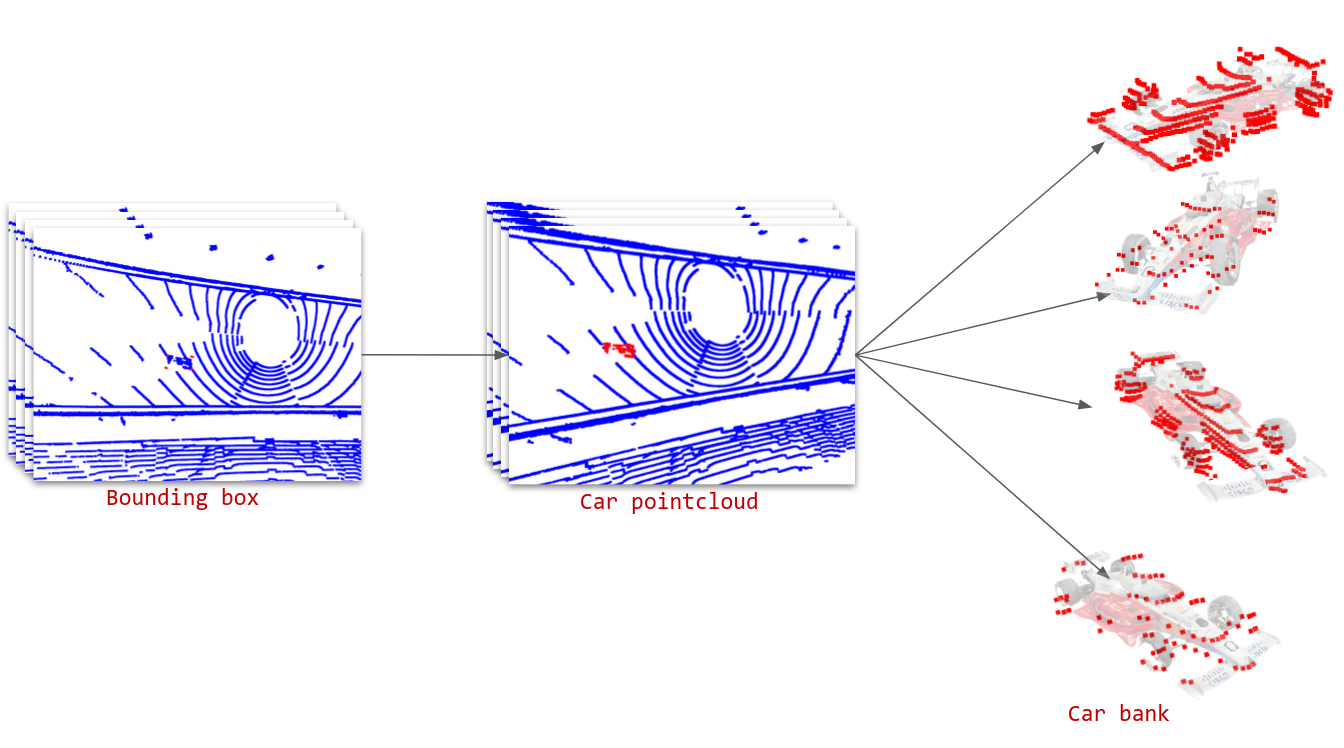

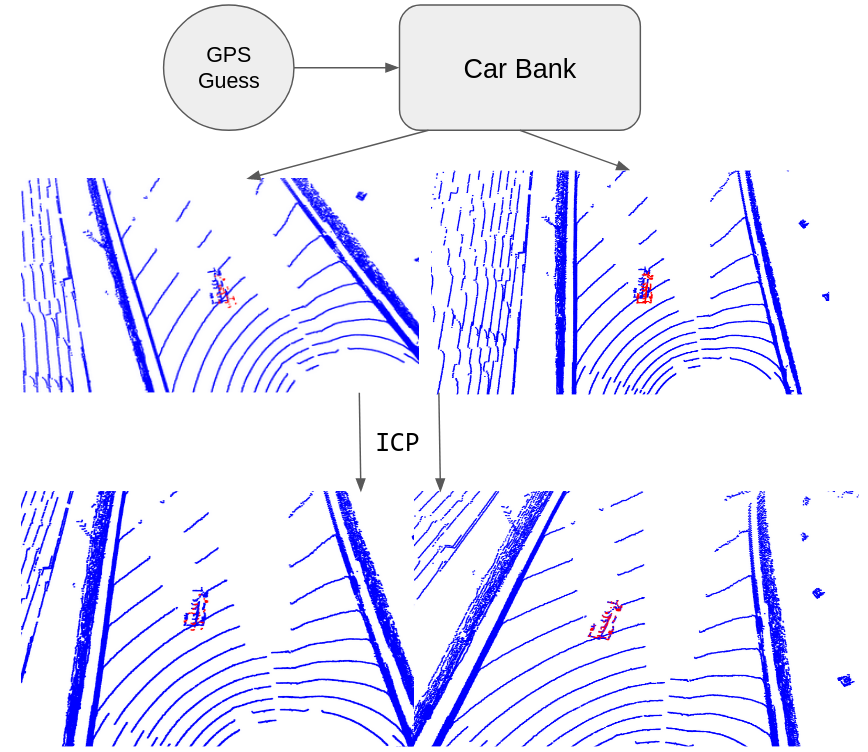

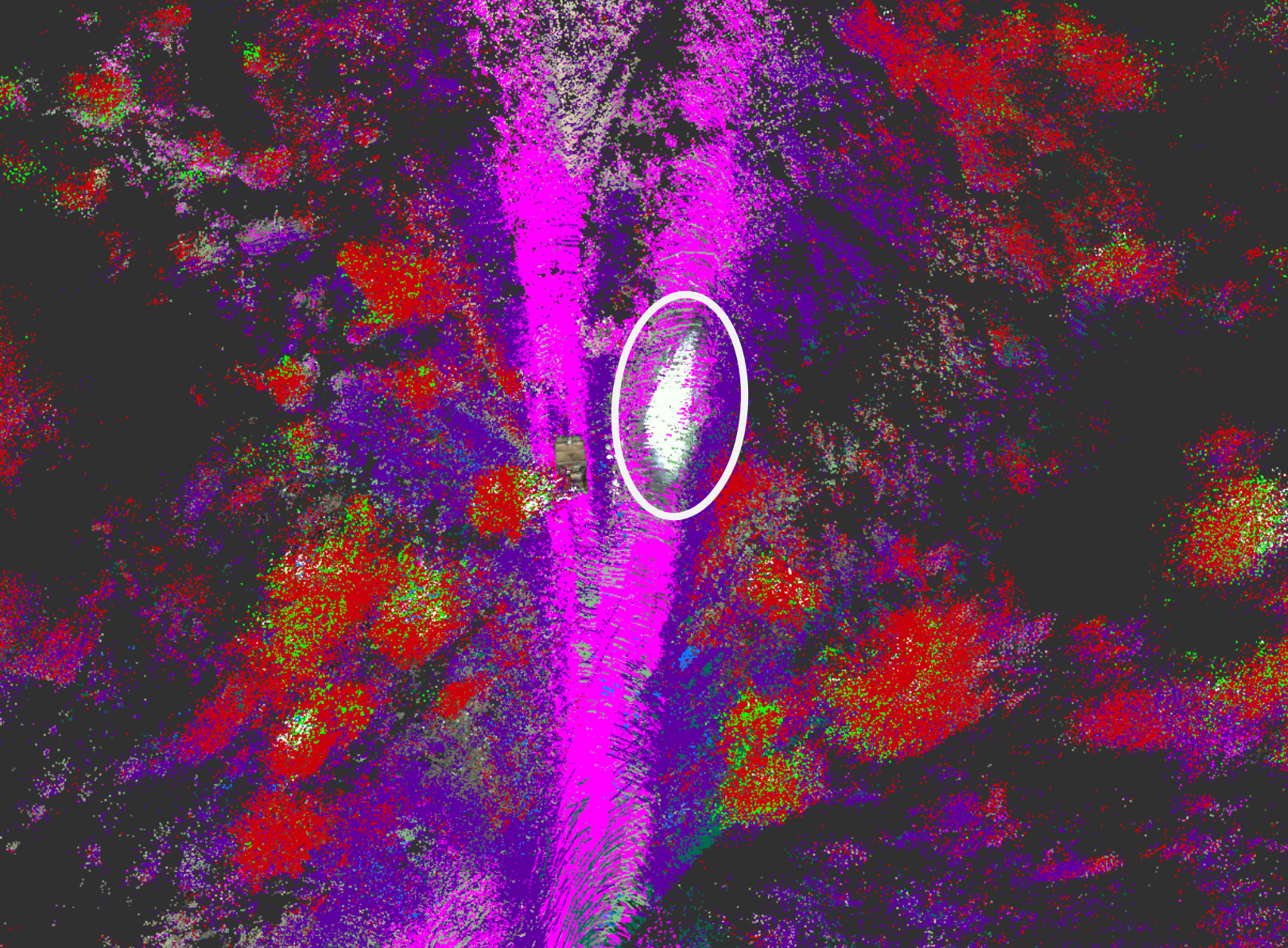

One effort I’ve been working on recently is reducing the number of manual labels needed to train our object detection networks. I’ve set up a pipeline that uses the few manual labels we have to generate a “bank” of example point clouds of the opponent car at different ranges. We then use the other car’s GPS data (we have access to other teams’ data post-race) as an initial guess for ICP (Iterative Closest Point) registration between cars in the bank and a new, unlabeled point cloud. The result is an automatic labelling pipeline.
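The labelling step can be sketched roughly as follows. This is a simplified illustration, not the team’s code: it uses plain point-to-point ICP with brute-force nearest neighbors and an SVD (Kabsch) fit, and it assumes the bank is a list of `(range_m, points)` pairs with each template expressed in the car’s own frame near the origin, so the GPS-derived relative position can serve directly as the initial translation. The helper names `pick_template` and `auto_label` are hypothetical.

```python
import numpy as np


def nearest_neighbors(src, dst):
    """Brute-force nearest neighbor in dst for every point in src."""
    d2 = ((src[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
    idx = d2.argmin(axis=1)
    return idx, np.sqrt(d2[np.arange(len(src)), idx])


def best_fit_transform(a, b):
    """Least-squares rigid transform (R, t) mapping points a onto b (Kabsch/SVD)."""
    ca, cb = a.mean(axis=0), b.mean(axis=0)
    H = (a - ca).T @ (b - cb)
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:  # correct an improper rotation (reflection)
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    return R, cb - R @ ca


def icp(template, scan, init_t, max_iters=50, tol=1e-9):
    """Point-to-point ICP aligning template onto scan, starting from translation init_t."""
    R_total = np.eye(template.shape[1])
    t_total = np.asarray(init_t, dtype=float).copy()
    prev_err = np.inf
    for _ in range(max_iters):
        moved = template @ R_total.T + t_total
        idx, dist = nearest_neighbors(moved, scan)
        err = dist.mean()
        if prev_err - err < tol:  # stop once the mean residual stops decreasing
            break
        prev_err = err
        R, t = best_fit_transform(moved, scan[idx])
        # Compose the incremental update with the running pose.
        R_total = R @ R_total
        t_total = R @ t_total + t
    return R_total, t_total, err


def pick_template(bank, gps_range):
    """Choose the bank entry recorded at the range closest to the GPS-derived range."""
    ranges = np.array([r for r, _ in bank])
    return bank[int(np.abs(ranges - gps_range).argmin())][1]


def auto_label(bank, scan, gps_rel_xyz):
    """Hypothetical labelling step: GPS offset -> template choice and ICP initial guess."""
    gps_rel_xyz = np.asarray(gps_rel_xyz, dtype=float)
    template = pick_template(bank, np.linalg.norm(gps_rel_xyz))
    # The recovered (R, t) gives the opponent car's pose, i.e. the box label.
    return icp(template, scan, init_t=gps_rel_xyz)
```

The GPS guess matters because ICP only converges locally: starting near the true pose keeps the initial nearest-neighbor correspondences mostly correct, so each SVD fit moves the template toward the right alignment instead of a spurious one. In practice a KD-tree or a library such as Open3D would replace the brute-force search.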