Many downstream tasks in robotics, such as path planning, intelligent obstacle avoidance, or computationally expensive inspection of an environment that can’t be done online, rely on having a prior map. To create maps efficiently, individual observations from multiple robots can be aggregated into one large, dense map. However, it can be difficult to align maps from different robots and, when discrepancies between maps arise, to determine which one is correct. We propose a robot-agnostic algorithm to generate high-fidelity point cloud maps that incorporates automatic registration and reconciles disparities by generating confidence maps for each robot. We validate our algorithm by running it in real time with maps generated by a ground vehicle and an aerial vehicle.

The end result is a framework that allows us to insert dense pointclouds from a ground vehicle into a sparser map generated by an overhead drone. Our code is available here.

The full report for my SLAM class is available at the bottom, but the overall flow of the project is as follows:

Approach

Hardware

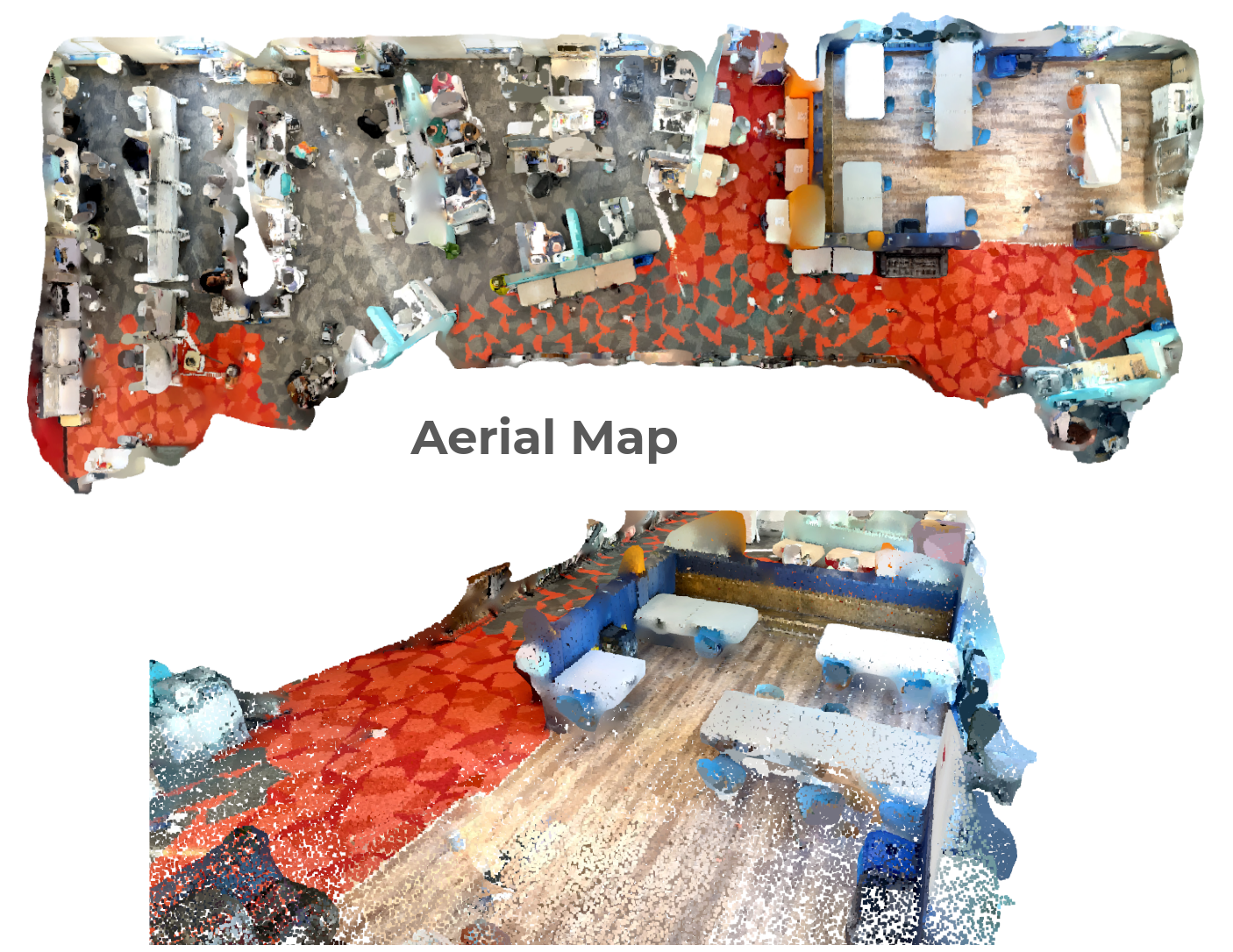

For simplicity, we emulate a drone by recording a pointcloud from an iPhone lidar pointed downwards:

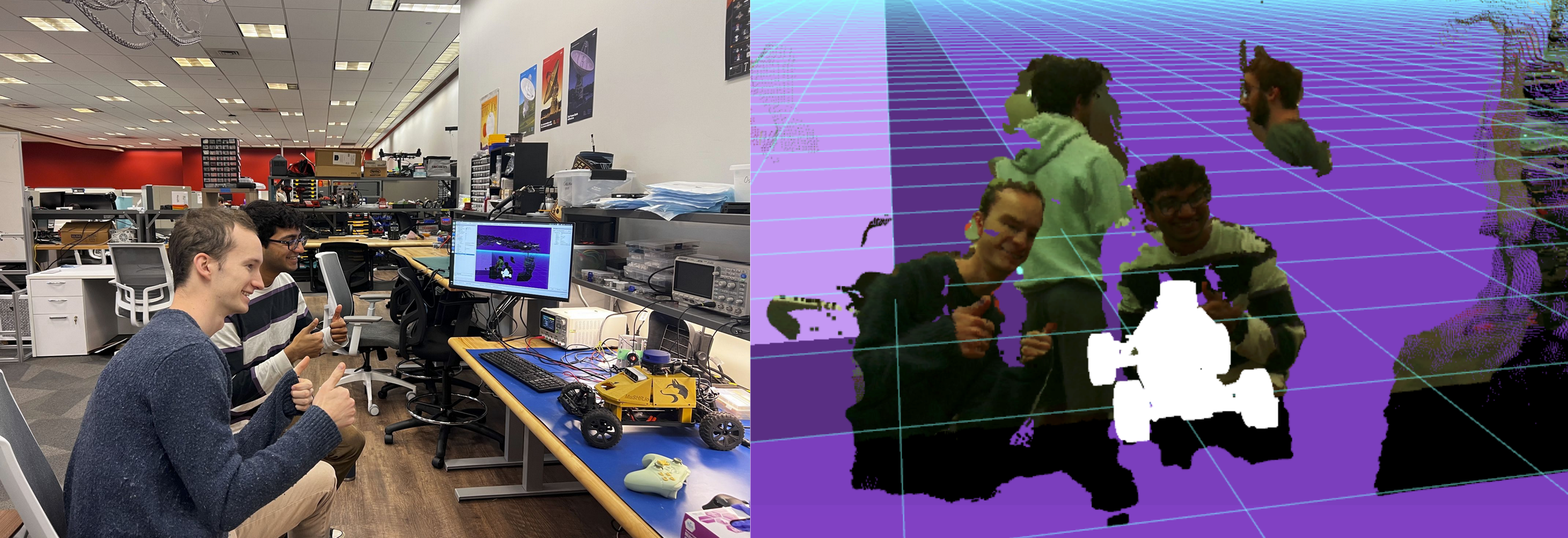

For our ground vehicle, we originally planned to use a RealSense camera on a MuSHR robot as shown below, but ended up using the iPhone lidar again, this time held at a low angle and pointed forward.

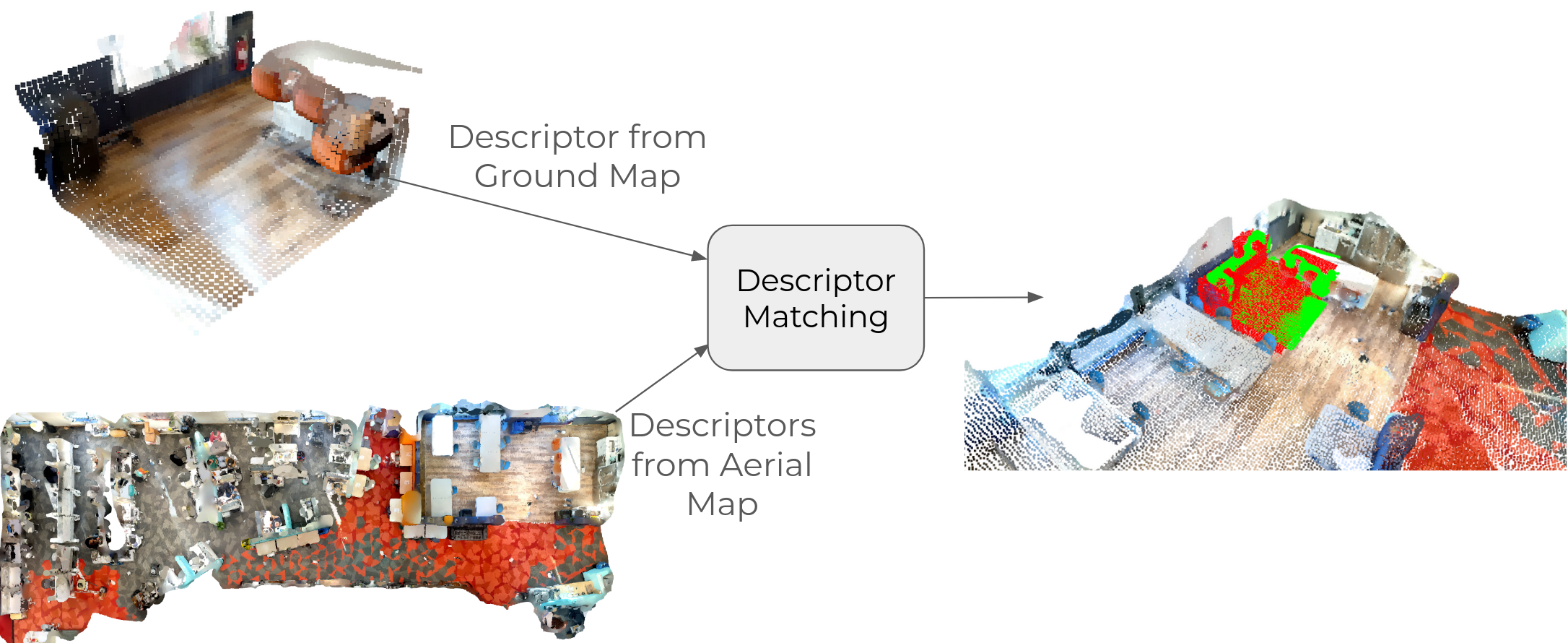

Descriptor Matching

Offline Descriptor Generation

Offline, we generate descriptors for 3x3m patches of the aerial map, where the descriptor is computed by binning the patch by height and computing information such as proportion, standard deviation, and color for each bin.
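As a rough illustration of the height-binned descriptor described above, here is a minimal sketch in plain Python. The point layout `(x, y, z, r, g, b)`, the height range, the number of bins, and the function name `patch_descriptor` are all assumptions made for this example, not the values or names used in the project.

```python
from statistics import pstdev

def patch_descriptor(points, z_min=0.0, z_max=2.0, n_bins=4):
    """Build a height-binned descriptor for one patch.

    points: iterable of (x, y, z, r, g, b) tuples (assumed layout).
    Returns a flat list with 5 values per bin:
    [proportion, z standard deviation, mean r, mean g, mean b].
    """
    bins = [[] for _ in range(n_bins)]
    bin_height = (z_max - z_min) / n_bins
    for p in points:
        z = p[2]
        if z < z_min or z > z_max:
            continue  # ignore points outside the assumed height range
        idx = min(int((z - z_min) / bin_height), n_bins - 1)
        bins[idx].append(p)

    total = sum(len(b) for b in bins)
    descriptor = []
    for b in bins:
        if not b:
            descriptor.extend([0.0] * 5)  # empty bin contributes zeros
            continue
        proportion = len(b) / total
        z_std = pstdev([p[2] for p in b])
        mean_color = [sum(p[3 + c] for p in b) / len(b) for c in range(3)]
        descriptor.extend([proportion, z_std] + mean_color)
    return descriptor
```

Running this over every 3x3m patch of the aerial map once, offline, would give a small table of descriptors keyed by patch location that can be searched quickly at runtime.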

Online Descriptor Matching

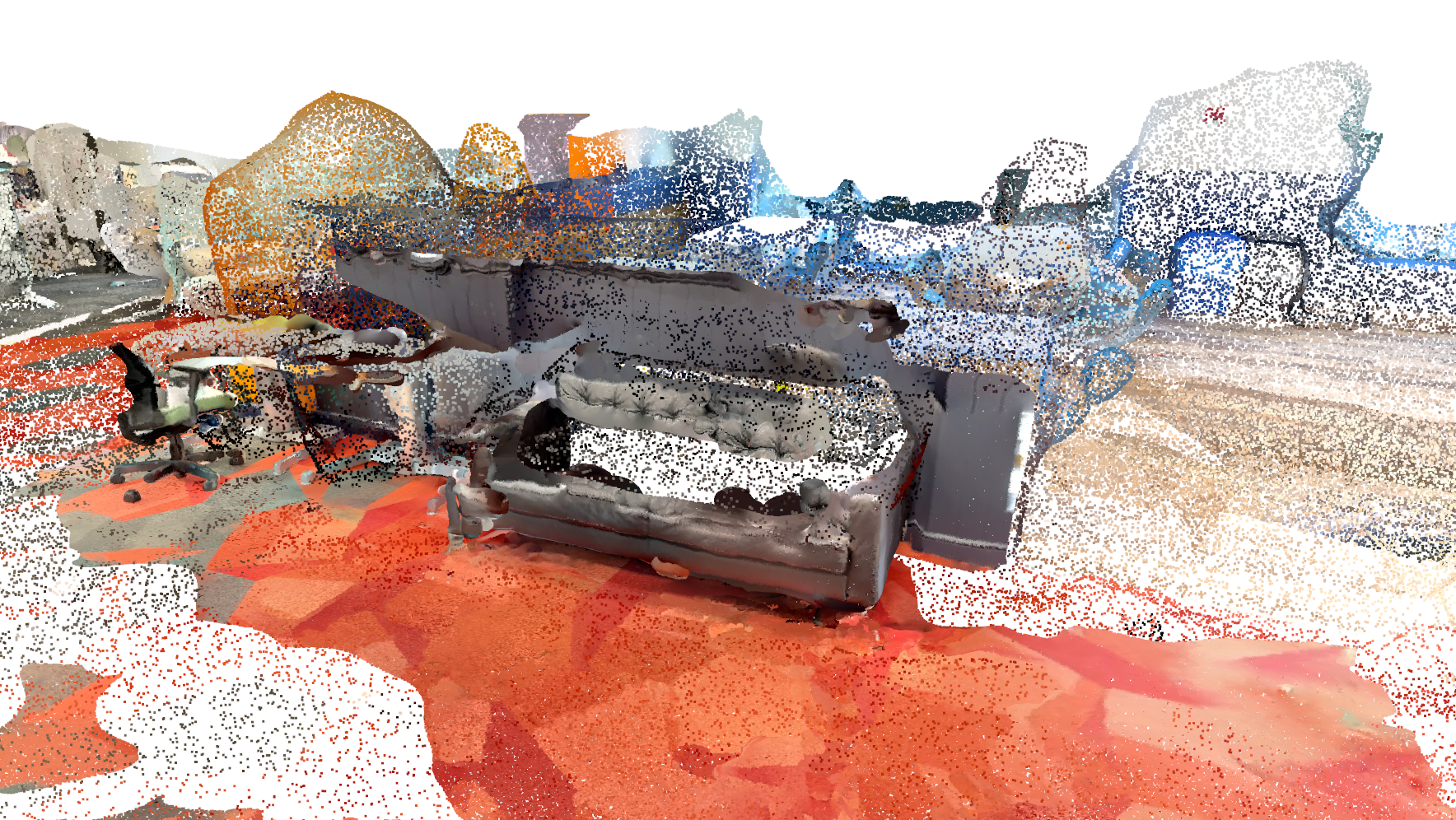

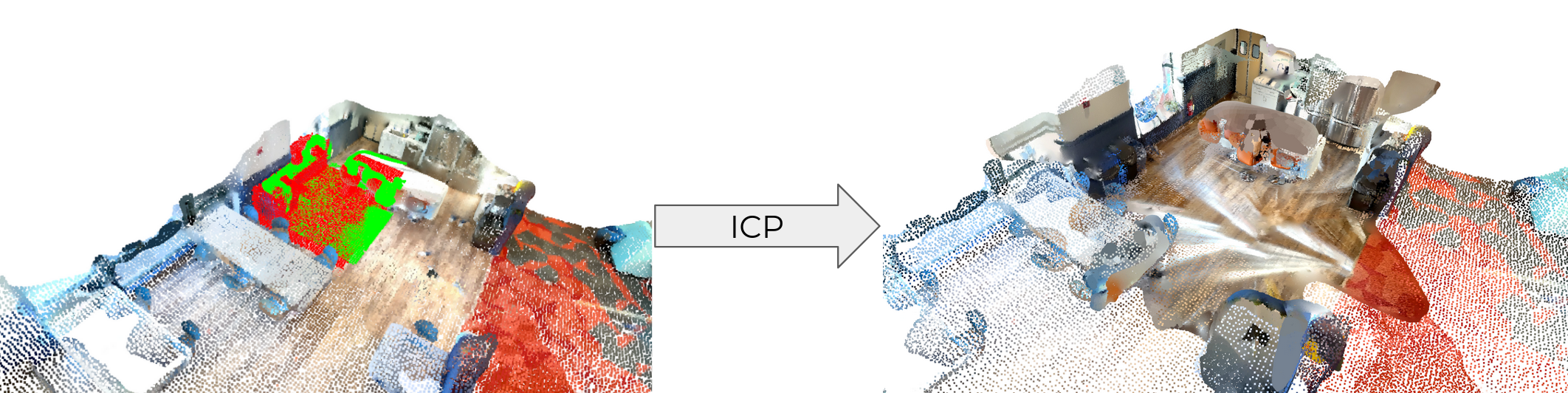

Online, we observe a 3x3m patch of pointcloud collected from the ground vehicle and compute its descriptor. We find the closest descriptor from the aerial map and use this as an initial guess as to where the ground robot is in the aerial map frame. We then use ICP to refine this guess and register the vehicle cloud into the drone cloud. This results in a much denser map, but introduces some discrepancies, as both the drone and vehicle have inaccuracies due to occlusions.
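The lookup step can be sketched as a brute-force nearest-neighbor search over the precomputed aerial descriptors. This is only an illustration: the dictionary layout (patch center mapped to descriptor), the Euclidean distance metric, and the name `closest_patch` are assumptions, and the ICP refinement is not shown.

```python
import math

def closest_patch(query, aerial_descriptors):
    """Return (patch_center, distance) of the best-matching aerial patch.

    query: descriptor of the ground-vehicle patch (list of floats).
    aerial_descriptors: dict mapping a patch center (x, y) to its
    descriptor (assumed layout for this sketch).
    """
    if not aerial_descriptors:
        raise ValueError("no aerial descriptors to match against")
    best_center, best_dist = None, math.inf
    for center, descriptor in aerial_descriptors.items():
        dist = math.dist(query, descriptor)  # Euclidean distance
        if dist < best_dist:
            best_center, best_dist = center, dist
    return best_center, best_dist
```

The returned patch center would then serve as the initial translation handed to ICP, which only needs to correct the remaining local misalignment.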

Discrepancy Resolution

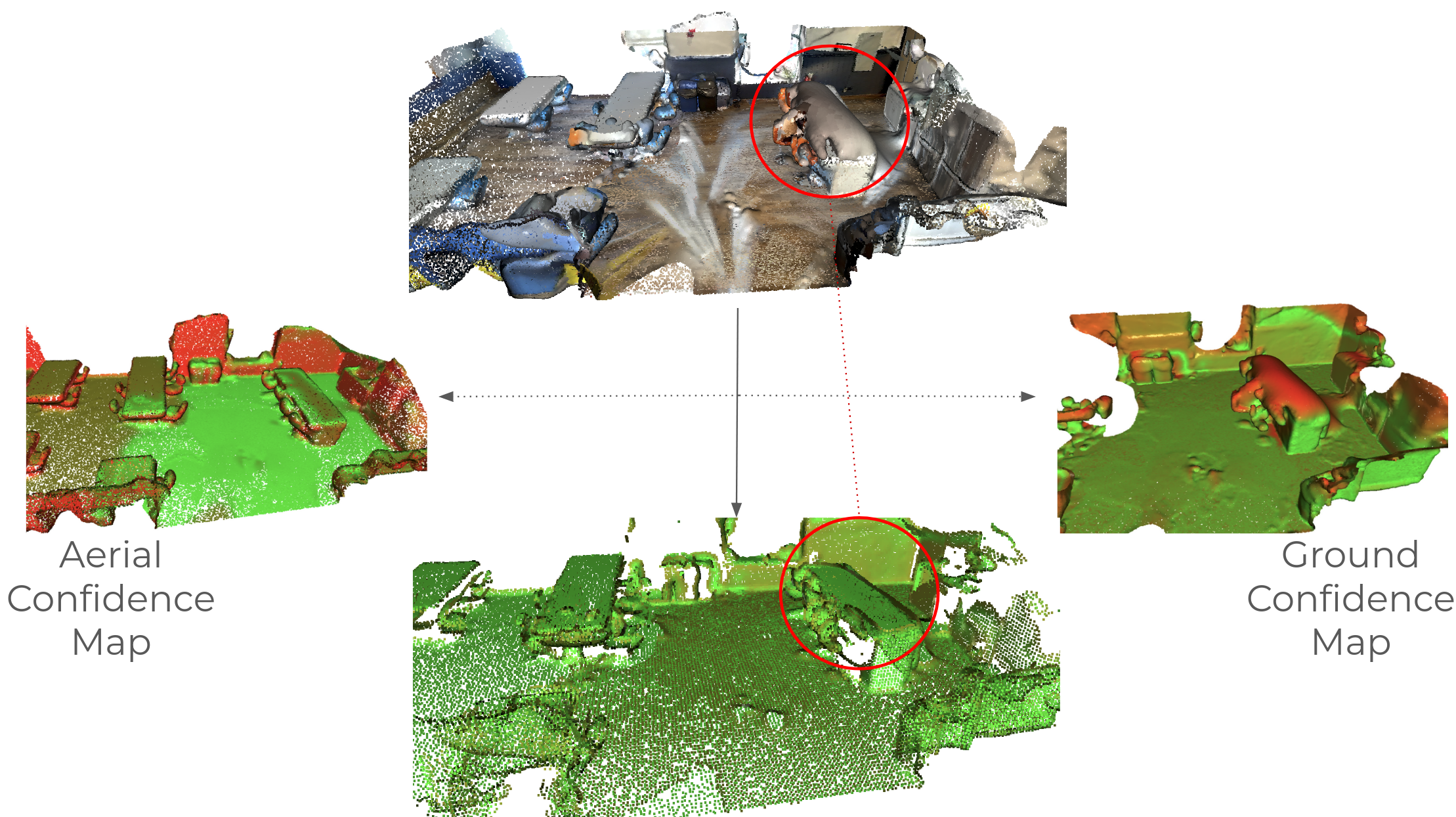

As a heuristic, we assume that a vehicle can be more confident about points whose surface normals are parallel to its viewing direction. For example, the drone can be more confident about tabletops, since the surface is perpendicular to its top-down view. To clean up the map, we detect discrepant points and then use this heuristic to determine which viewpoint is more likely to be correct.
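One simple way to turn this heuristic into a number is the absolute cosine between the surface normal and the direction from the point to the sensor. The sketch below assumes that scoring, known sensor positions, and a tie-break in favor of the aerial map; the function names are hypothetical, and how discrepant points are detected is not covered here.

```python
import math

def _unit(v):
    """Return v scaled to unit length."""
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0.0:
        raise ValueError("zero-length vector")
    return tuple(c / norm for c in v)

def view_confidence(point, normal, sensor_position):
    """Score in [0, 1]: 1 when the normal is parallel to the line of
    sight, 0 when the surface is seen edge-on (assumed scoring)."""
    view = _unit(tuple(s - p for s, p in zip(sensor_position, point)))
    n = _unit(normal)
    return abs(sum(a * b for a, b in zip(n, view)))

def more_trusted(point, normal, aerial_sensor, ground_sensor):
    """Pick which viewpoint to trust for a discrepant point.
    Ties go to the aerial map (an arbitrary choice for this sketch)."""
    aerial = view_confidence(point, normal, aerial_sensor)
    ground = view_confidence(point, normal, ground_sensor)
    return "aerial" if aerial >= ground else "ground"
```

For a tabletop point at `(0, 0, 1)` with an upward normal, a drone directly overhead scores 1 while a ground sensor at the same height scores 0, so the aerial observation would be kept.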

Results

Fusing the two maps yields a much denser map. As you can see below, there is a significant difference in density between areas covered by both vehicles and areas covered by the aerial map alone.